OpenStack API Services (Keystone): HAProxy Configuration (Part 2)

廖杰

I. Overview and Principles

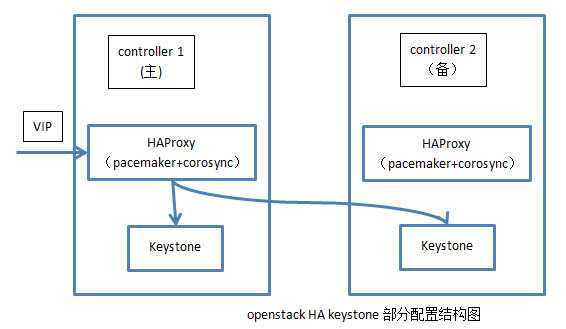

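1) Required components: Pacemaker + Corosync + HAProxy

2) How it works: HAProxy acts as the load balancer and distributes requests for the OpenStack API services across two mirrored controller nodes. Because the OpenStack API services are stateless, no data needs to be synchronized between the nodes. Concretely, a VIP is configured in Pacemaker and HAProxy listens on that VIP, forwarding requests sent to it to the two controller nodes. HAProxy itself runs as a Pacemaker resource, which makes it highly available. In addition, the endpoints of the API services in OpenStack must be changed to the VIP, and every address used to call those services must be changed to the VIP as well.

3) Only the Keystone part has been configured so far; the other services are handled in a similar way.

(The detailed configuration steps follow.)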

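II. Installing and Configuring HAProxy

1. Adjust the kernel parameters so that a process can bind to the VIP even when the address is not currently assigned to the local node: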

vim /etc/sysctl.conf

[Contents]

net.ipv4.ip_nonlocal_bind=1

sysctl -p
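Editing /etc/sysctl.conf by hand works, but a setup script that is run more than once should not append the same line twice. The following is a minimal sketch of an idempotent variant. `set_sysctl_conf` is a hypothetical helper name, not part of any package, and it only edits the file; `sysctl -p` still has to be run afterwards.

```shell
# Hypothetical helper: make sure FILE contains exactly one active "KEY=VALUE" line.
# Usage: set_sysctl_conf FILE KEY VALUE
set_sysctl_conf() (
    file=$1
    key=$2
    value=$3
    tmp=$(mktemp) || exit 1
    # Escape the dots in the key so they match literally in the regex.
    esc=$(printf '%s' "$key" | sed 's/\./\\./g')
    # Copy every line except existing active assignments of the key.
    if [ -f "$file" ]; then
        grep -v -E "^[[:space:]]*${esc}[[:space:]]*=" "$file" > "$tmp" || true
    fi
    # Append the desired assignment, then write the result back in place.
    printf '%s=%s\n' "$key" "$value" >> "$tmp"
    cat "$tmp" > "$file"
    rm -f "$tmp"
)
```

A possible invocation would be `set_sysctl_conf /etc/sysctl.conf net.ipv4.ip_nonlocal_bind 1 && sysctl -p`. Writing back with `cat` rather than `mv` keeps the ownership and permissions of the original file.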

2. Install HAProxy:

[Install from source]

wget -c http://haproxy.1wt.eu/download/1.4/src/haproxy-1.4.23.tar.gz

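[Alternate link: http://down1.chinaunix.net/distfiles/haproxy-1.4.21.tar.gz; note that this is version 1.4.21, so adjust the file and directory names below accordingly]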

tar zxvf haproxy-1.4.23.tar.gz

cd haproxy-1.4.23

make TARGET=linux26 # on a 32-bit machine, use: make TARGET=linux26 ARCH=i386

make install

mkdir -p /etc/haproxy

cp examples/haproxy.cfg /etc/haproxy/haproxy.cfg [optional; can be skipped]

[Install with yum]

yum install haproxy

3. Configure HAProxy:

vim /etc/haproxy/haproxy.cfg

[Contents]

global

daemon

defaults

mode http

maxconn 10000

timeout connect 10s

timeout client 10s

timeout server 10s

frontend keystone-admin-vip

bind 10.10.102.45:35357

default_backend keystone-admin-api

frontend keystone-public-vip

bind 10.10.102.45:5000

default_backend keystone-public-api

frontend quantum-vip

bind 10.10.102.45:9696

default_backend quantum-api

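# Note: with default settings glance-api listens on 9292 and glance-registry on 9191, so the two glance section names below are swapped relative to the ports. Each port is still forwarded to the same port on the back ends.

frontend glance-vip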

bind 10.10.102.45:9191

default_backend glance-api

frontend glance-registry-vip

bind 10.10.102.45:9292

default_backend glance-registry-api

frontend nova-ec2-vip

bind 10.10.102.45:8773

default_backend nova-ec2-api

frontend nova-compute-vip

bind 10.10.102.45:8774

default_backend nova-compute-api

frontend nova-metadata-vip

bind 10.10.102.45:8775

default_backend nova-metadata-api

frontend cinder-vip

bind 10.10.102.45:8776

default_backend cinder-api

backend keystone-admin-api

balance roundrobin

server mesa-virt-01 10.10.102.6:35357 check inter 10s

server mesa-virt-02 10.10.102.7:35357 check inter 10s

backend keystone-public-api

balance roundrobin

server mesa-virt-01 10.10.102.6:5000 check inter 10s

server mesa-virt-02 10.10.102.7:5000 check inter 10s

backend quantum-api

balance roundrobin

server mesa-virt-01 10.10.102.6:9696 check inter 10s

server mesa-virt-02 10.10.102.7:9696 check inter 10s

backend glance-api

balance roundrobin

server mesa-virt-01 10.10.102.6:9191 check inter 10s

server mesa-virt-02 10.10.102.7:9191 check inter 10s

backend glance-registry-api

balance roundrobin

server mesa-virt-01 10.10.102.6:9292 check inter 10s

server mesa-virt-02 10.10.102.7:9292 check inter 10s

backend nova-ec2-api

balance roundrobin

server mesa-virt-01 10.10.102.6:8773 check inter 10s

server mesa-virt-02 10.10.102.7:8773 check inter 10s

backend nova-compute-api

balance roundrobin

server mesa-virt-01 10.10.102.6:8774 check inter 10s

server mesa-virt-02 10.10.102.7:8774 check inter 10s

backend nova-metadata-api

balance roundrobin

server mesa-virt-01 10.10.102.6:8775 check inter 10s

server mesa-virt-02 10.10.102.7:8775 check inter 10s

backend cinder-api

balance roundrobin

server mesa-virt-01 10.10.102.6:8776 check inter 10s

server mesa-virt-02 10.10.102.7:8776 check inter 10s
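Every service in the configuration above follows the same pattern: a frontend named NAME-vip bound to the VIP, and a backend named NAME-api listing the same port on both controller nodes. As a rough sketch, the pairs could be generated instead of typed by hand. `haproxy_service` is a hypothetical helper introduced here for illustration, and the indentation it emits is purely cosmetic.

```shell
# Hypothetical helper: print one frontend/backend pair in the style used above.
# Usage: haproxy_service NAME PORT VIP SERVER_NAME=ADDRESS...
haproxy_service() (
    name=$1
    port=$2
    vip=$3
    shift 3
    printf 'frontend %s-vip\n' "$name"
    printf '    bind %s:%s\n' "$vip" "$port"
    printf '    default_backend %s-api\n\n' "$name"
    printf 'backend %s-api\n' "$name"
    printf '    balance roundrobin\n'
    for node in "$@"; do
        # Each remaining argument has the form name=address.
        printf '    server %s %s:%s check inter 10s\n' "${node%%=*}" "${node#*=}" "$port"
    done
)
```

For example, `haproxy_service keystone-public 5000 10.10.102.45 mesa-virt-01=10.10.102.6 mesa-virt-02=10.10.102.7` prints the keystone-public pair; the global and defaults sections still have to be written separately.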

4. Init script for starting and managing HAProxy:

vim /etc/init.d/haproxy

[Contents]

#!/bin/bash

#

# chkconfig: 2345 85 15

# description: HA-Proxy is a TCP/HTTP reverse proxy which is particularly suited \

# for high availability environments.

# processname: haproxy

# config: /etc/haproxy/haproxy.cfg

# pidfile: /var/run/haproxy.pid

# Source function library.

if [ -f /etc/init.d/functions ]; then

. /etc/init.d/functions

elif [ -f /etc/rc.d/init.d/functions ] ; then

. /etc/rc.d/init.d/functions

else

exit 0

fi

CONF_FILE="/etc/haproxy/haproxy.cfg"

HAPROXY_BINARY="/usr/local/sbin/haproxy"

PID_FILE="/var/run/haproxy.pid"

# Source networking configuration.

. /etc/sysconfig/network

# Check that networking is up.

[ "${NETWORKING}" = "no" ] && exit 0

[ -f ${CONF_FILE} ] || exit 1

RETVAL=0

start() {

$HAPROXY_BINARY -c -q -f $CONF_FILE

if [ $? -ne 0 ]; then

echo "Errors found in configuration file."

return 1

fi

echo -n "Starting HAproxy: "

daemon $HAPROXY_BINARY -D -f $CONF_FILE -p $PID_FILE

RETVAL=$?

echo

[ $RETVAL -eq 0 ] && touch /var/lock/subsys/haproxy

return $RETVAL

}

stop() {

echo -n "Shutting down HAproxy: "

killproc haproxy -USR1

RETVAL=$?

echo

[ $RETVAL -eq 0 ] && rm -f /var/lock/subsys/haproxy

[ $RETVAL -eq 0 ] && rm -f $PID_FILE

return $RETVAL

}

restart() {

$HAPROXY_BINARY -c -q -f $CONF_FILE

if [ $? -ne 0 ]; then

echo "Errors found in configuration file, check it with 'haproxy check'."

return 1

fi

stop

start

}

check() {

$HAPROXY_BINARY -c -q -V -f $CONF_FILE

}

rhstatus() {

pid=$(pidof haproxy)

if [ -z "$pid" ]; then

echo "HAProxy is stopped."

exit 3

fi

status haproxy

}

condrestart() {

[ -e /var/lock/subsys/haproxy ] && restart || :

}

# See how we were called.

case "$1" in

start)

start

;;

stop)

stop

;;

restart)

restart

;;

reload)

restart

;;

condrestart)

condrestart

;;

status)

rhstatus

;;

check)

check

;;

*)

echo $"Usage: haproxy {start|stop|restart|reload|condrestart|status|check}"

RETVAL=1

esac

exit $RETVAL

5. Check that the HAProxy configuration is valid:

# /etc/init.d/haproxy check

Configuration file is valid

[The following fixes may be needed:]

1) [Problem]

/etc/init.d/haproxy check

-bash: /etc/init.d/haproxy: Permission denied

[Solution]

cd /etc/init.d

chmod a+x haproxy

2) [Problem]

/etc/init.d/haproxy check

/etc/init.d/haproxy: line 70: /usr/local/bin/haproxy: No such file or directory

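[Solution: this error appears when HAPROXY_BINARY in the script points to /usr/local/bin/haproxy. Either set HAPROXY_BINARY to the path where the binary is actually installed, or copy the binary to the expected location:]

cp /usr/local/sbin/haproxy /usr/local/bin/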
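Rather than copying the binary around, it may be simpler to find out where it actually lives and set HAPROXY_BINARY to that path. The sketch below assumes the usual locations: /usr/local/sbin for the source install shown earlier and /usr/sbin for the yum package. `first_executable` is a hypothetical helper name.

```shell
# Hypothetical helper: print the first argument that is an executable file.
# Returns 1 if none of the candidates qualifies.
first_executable() {
    for candidate in "$@"; do
        if [ -x "$candidate" ] && [ ! -d "$candidate" ]; then
            printf '%s\n' "$candidate"
            return 0
        fi
    done
    return 1
}
```

Calling `first_executable /usr/local/sbin/haproxy /usr/local/bin/haproxy /usr/sbin/haproxy` prints the path to use, or prints nothing and returns a non-zero status if HAProxy is not installed in any of those places.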

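III. Installing and Configuring Pacemaker + Corosync

[Installation of Pacemaker + Corosync + crmsh is omitted]

1. First define some cluster properties and resource defaults (disable STONITH to avoid STONITH errors, ignore loss of quorum, keep resources from moving back after recovery, and so on):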

# crm configure

property stonith-enabled=false

property no-quorum-policy=ignore

rsc_defaults resource-stickiness=100

rsc_defaults failure-timeout=0

rsc_defaults migration-threshold=10

2. Configure the VIP resource:

crm(live)configure#

primitive api-vip ocf:heartbeat:IPaddr2 params ip=10.10.102.45 cidr_netmask=24 op monitor interval=5s

3. Configure the HAProxy resource:

crm(live)configure#

primitive haproxy lsb:haproxy op monitor interval="5s"
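Pacemaker manages an lsb: resource only through the init script's exit codes, so the script has to behave in an LSB-compatible way: start and stop return 0 even when repeated, and status returns 0 while running and 3 while stopped. The sketch below runs that sequence against a script. `lsb_check` is a hypothetical helper, and because it really starts and stops the service it should only be run on a node where that is safe, before the resource is handed to the cluster.

```shell
# Hypothetical helper: run the basic LSB exit-code sequence against an init script.
# Usage: lsb_check /etc/init.d/haproxy
lsb_check() (
    script=$1
    fail=0
    # expect WANT ACTION: run the action and compare its exit code with WANT.
    expect() {
        want=$1
        shift
        got=0
        "$script" "$@" >/dev/null 2>&1 || got=$?
        if [ "$got" -ne "$want" ]; then
            echo "FAIL: $* returned $got, expected $want"
            fail=1
        fi
    }
    expect 0 start
    expect 0 status
    expect 0 start      # starting a running service must still succeed
    expect 0 stop
    expect 3 status     # 3 means "not running"
    expect 0 stop       # stopping a stopped service must still succeed
    [ "$fail" -eq 0 ] && echo "OK"
    exit "$fail"
)
```

If any step prints FAIL, Pacemaker is likely to misjudge the state of the resource, and the init script should be fixed before it is used with lsb:haproxy.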

4. Require HAProxy and the VIP to run on the same node:

crm(live)configure#

colocation haproxy-with-vip INFINITY: haproxy api-vip

5. Start HAProxy only after the VIP has been taken over:

crm(live)configure#

order haproxy-after-IP mandatory: api-vip haproxy

6. Verify and commit the configuration:

crm(live)configure# verify

crm(live)configure# commit

crm(live)configure# quit
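The statements from steps 1 to 5 can also be collected in a file and loaded in one go instead of being typed into the interactive shell. The sketch below only prints the statements; `crm_api_config` is a hypothetical helper, and loading the result with `crm configure load update FILE` is an assumption about the installed crmsh version that should be checked against its documentation.

```shell
# Hypothetical helper: print the cluster configuration from steps 1-5.
# Usage: crm_api_config VIP NETMASK_BITS
crm_api_config() {
    cat <<EOF
property stonith-enabled=false
property no-quorum-policy=ignore
rsc_defaults resource-stickiness=100
rsc_defaults failure-timeout=0
rsc_defaults migration-threshold=10
primitive api-vip ocf:heartbeat:IPaddr2 params ip=$1 cidr_netmask=$2 op monitor interval=5s
primitive haproxy lsb:haproxy op monitor interval="5s"
colocation haproxy-with-vip INFINITY: haproxy api-vip
order haproxy-after-IP mandatory: api-vip haproxy
EOF
}
```

For instance, `crm_api_config 10.10.102.45 24 > api-ha.crm` writes the file, which keeps the cluster definition reviewable and repeatable across test environments.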

7. Check resource status

Check the resource status:

crm_mon -1 [may have problems]

or

crm status

On machine 1:

/etc/init.d/haproxy status

haproxy (pid 1629) is running...

ip addr show eth0

2: eth0: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 08:00:27:67:ab:7e brd ff:ff:ff:ff:ff:ff

inet 10.10.102.6/24 brd 192.168.1.255 scope global eth0

inet 10.10.102.45/24 brd 192.168.1.255 scope global secondary eth0 [the VIP appears here]

inet6 fe80::a00:27ff:fe67:ab7e/64 scope link

valid_lft forever preferred_lft forever

On machine 2:

/etc/init.d/haproxy status

HAProxy is stopped.

ip addr show eth0

2: eth0: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 08:00:27:67:ab:7e brd ff:ff:ff:ff:ff:ff

inet 10.10.102.7/24 brd 192.168.1.255 scope global eth0

inet6 fe80::a00:27ff:fe67:ab7e/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

From this output we can see that the VIP is bound on machine 1 and that HAProxy is running only on machine 1.
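Checking by eye which node holds the VIP gets tedious during repeated failover tests. As a small sketch, the check can be scripted by scanning the `ip addr show` output for an exact address match. `has_vip` is a hypothetical helper name.

```shell
# Hypothetical helper: succeed if ADDRESS appears as an "inet" entry on stdin.
# Usage: ip addr show eth0 | has_vip 10.10.102.45
has_vip() {
    awk -v vip="$1" '
        # The second field looks like 10.10.102.45/24; compare the part before "/".
        $1 == "inet" { split($2, a, "/"); if (a[1] == vip) found = 1 }
        END { exit found ? 0 : 1 }'
}
```

Comparing the whole address avoids false positives from prefixes, so 10.10.102.4 would not be mistaken for 10.10.102.45.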

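8. Failover test

In Pacemaker, put the machine that is currently running the resources into standby and watch the cluster resources move between the two machines.

[Commands used]

crm node standby NODE_NAME

crm status

crm node online NODE_NAME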

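IV. Configuring an API Service, Using Keystone as the Example

1. /etc/keystone/keystone.conf [for other API services, change the authentication address but not the bind address]

[No change seems to be needed; each node binds to and listens on its own physical IP]

2. Service endpoint configuration [this only needs to be done on one machine: because MySQL is already highly available at this point, an endpoint change made on either machine takes effect for both]

1) Log in to Keystone as the current admin user

2) Create an endpoint bound to the VIP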

keystone service-list

[Note the service ID of keystone in the service-list output]

keystone endpoint-create --region RegionOne \

--service-id $(keystone service-list | awk '/ keystone / {print $2}') \

--publicurl "http://10.10.102.45:5000/v2.0" \

--internalurl "http://10.10.102.45:5000/v2.0" \

--adminurl http://10.10.102.45:35357/v2.0

[10.10.102.45 is the VIP]
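The awk pattern in the command above matches any row that contains the word keystone surrounded by spaces, which could also hit another service whose description mentions it. A slightly stricter sketch compares the name column exactly. It assumes the table layout printed by the keystone client, with the ID in the first column and the name in the second, and `service_id` is a hypothetical helper name.

```shell
# Hypothetical helper: print the ID of the row whose name column equals NAME.
# Usage: keystone service-list | service_id keystone
service_id() {
    awk -F'|' -v name="$1" '
        # Data rows look like: | ID | NAME | TYPE | DESCRIPTION |
        NF >= 3 {
            id = $2
            n = $3
            gsub(/^[ \t]+|[ \t]+$/, "", id)
            gsub(/^[ \t]+|[ \t]+$/, "", n)
            if (n == name) print id
        }'
}
```

With this helper the option would become `--service-id $(keystone service-list | service_id keystone)`.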

3) Delete the old endpoint

keystone endpoint-list

[Note the ID of the old endpoint]

keystone endpoint-delete 21a7b25a08d74882a711f09f0c313170

4) Restart the service

service openstack-keystone restart

5) Verify

export OS_AUTH_URL=http://10.10.102.45:35357/v2.0/

[10.10.102.45 is the VIP]

keystone user-list [should succeed]

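V. Testing and Verification:

Because the API services are not managed as Pacemaker resources, the API service has to be stopped manually on one of the two machines; then log in to the API service through the VIP from another machine.

[For example, using the following commands]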

service openstack-keystone stop

service openstack-keystone status
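A single successful login does not show much, because round-robin balancing may simply have picked the node that is still running. One way to get a clearer picture is to repeat a request through the VIP while the service is stopped on one node. The sketch below is a generic retry counter; `probe` and the `PROBE_INTERVAL` variable are hypothetical names introduced here, and the check command is supplied by the caller.

```shell
# Hypothetical helper: run a check command COUNT times and report the successes.
# Usage: probe COUNT COMMAND [ARGS...]
# PROBE_INTERVAL sets the pause between runs in seconds (default 1).
probe() (
    count=$1
    shift
    ok=0
    i=0
    while [ "$i" -lt "$count" ]; do
        if "$@" >/dev/null 2>&1; then
            ok=$((ok + 1))
        fi
        i=$((i + 1))
        # Pause between runs, but not after the last one.
        if [ "$i" -lt "$count" ]; then
            sleep "${PROBE_INTERVAL:-1}"
        fi
    done
    echo "$ok/$count succeeded"
    [ "$ok" -eq "$count" ]
)
```

For example, `probe 30 curl -sf http://10.10.102.45:5000/v2.0/` could be run from a third machine, assuming curl is installed there. Some requests may fail right after the service is stopped, until the HAProxy health check (every 10s in the configuration above) marks that back end as down.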

References:

http://openstack.redhat.com/Load_Balance_OpenStack_API#HAProxy

http://openstack.redhat.com/RDO_HighlyAvailable_and_LoadBalanced_Control_Services [also covers HA configuration for MySQL and Qpid]