Spark: Connecting to SQL (MySQL) and Hive

Date: 2020-10-06 20:52:59

I. Connecting to SQL (MySQL)

```scala
package com.njbdqn.linkSql

import java.util.Properties

import org.apache.spark.sql.SparkSession

object LinkSql {
  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("apptest")
      .master("local[2]")
      .getOrCreate()

    // 1. Connection properties (the MySQL JDBC driver jar must be on the classpath)
    val prop = new Properties()
    prop.setProperty("driver", "com.mysql.jdbc.Driver")
    prop.setProperty("user", "root")
    prop.setProperty("password", "root")

    // 2. Read the studentInfo table into a DataFrame and print it without truncating columns
    val jdbcDF = spark.read.jdbc("jdbc:mysql://192.168.56.111:3306/test", "studentInfo", prop)
    jdbcDF.show(false)

    // 3. Append rows; the DataFrame column names must exist in studentInfo
    import spark.implicits._
    val df = Seq(
      (90, "抖抖抖", "男", 23, "sdf", "sdfg@dfg"), // sample rows; sgender "男" = male
      (8, "抖33", "男", 23, "s444f", "sdfg@dfg")
    ).toDF("sid", "sname", "sgender", "sage", "saddress", "semail")
    // df.show(false)
    df.write.mode("append").jdbc("jdbc:mysql://192.168.56.111:3306/test", "studentInfo", prop)

    spark.stop()
  }
}
```
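The URL, driver and credentials above are repeated for both the read and the write. A minimal sketch, assuming you want them defined in one place: the hypothetical `MySqlConn` case class and `JdbcConfig` helper below build the URL string and the `java.util.Properties` object that `spark.read.jdbc` and `df.write.jdbc` take as arguments. It uses only the JDK, so it compiles without Spark. The default driver class `com.mysql.jdbc.Driver` matches the code above and applies to MySQL Connector/J 5.x; Connector/J 8.x uses `com.mysql.cj.jdbc.Driver`.

```scala
import java.util.Properties

// Hypothetical connection descriptor; field names are illustrative only.
final case class MySqlConn(
    host: String,
    port: Int,
    database: String,
    user: String,
    password: String
)

object JdbcConfig {
  // Builds a URL of the form jdbc:mysql://host:port/database
  def url(c: MySqlConn): String =
    s"jdbc:mysql://${c.host}:${c.port}/${c.database}"

  // Builds the Properties passed as the third argument of read.jdbc / write.jdbc
  def props(c: MySqlConn, driver: String = "com.mysql.jdbc.Driver"): Properties = {
    val p = new Properties()
    p.setProperty("driver", driver)
    p.setProperty("user", c.user)
    p.setProperty("password", c.password)
    p
  }
}
```

With `val conn = MySqlConn("192.168.56.111", 3306, "test", "root", "root")`, the read becomes `spark.read.jdbc(JdbcConfig.url(conn), "studentInfo", JdbcConfig.props(conn))`, and the write reuses the same two values.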

II. Connecting to Hive

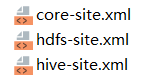

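1. Add the configuration files to the project's `resources` directory (typically `hive-site.xml`, copied from the Hive installation)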

2. Code

```scala
package com.njbdqn.linkSql

import org.apache.spark.sql.SparkSession

object LinkHive {
  def main(args: Array[String]): Unit = {
    // enableHiveSupport() lets spark.sql resolve tables through the Hive metastore
    val spark = SparkSession.builder()
      .appName("apptest")
      .master("local[2]")
      .enableHiveSupport()
      .getOrCreate()

    spark
      // .sql("show databases")
      .sql("select * from storetest.testhive")
      .show(false)

    spark.stop()
  }
}
```
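If `hive-site.xml` is not on the classpath, Spark does not fail at startup; it falls back to a local metastore (a `metastore_db` directory in the working directory), so a query such as `select * from storetest.testhive` fails later because that database does not exist there. A minimal sketch of a pre-flight check, assuming the files from step 1 end up on the classpath: the hypothetical `HiveConfigCheck.missing` returns the names of the config files that the class loader cannot find. The default file list (`hive-site.xml`, `core-site.xml`, `hdfs-site.xml`) is an assumption based on the files Spark reads for Hive and HDFS settings.

```scala
// Hypothetical pre-flight check: lists which Hive/Hadoop config files are NOT
// visible on the classpath (for example, missing from src/main/resources).
object HiveConfigCheck {
  val defaultFiles: Seq[String] = Seq("hive-site.xml", "core-site.xml", "hdfs-site.xml")

  def missing(
      files: Seq[String] = defaultFiles,
      loader: ClassLoader = getClass.getClassLoader
  ): Seq[String] =
    // ClassLoader.getResource returns null when the resource cannot be found
    files.filter(name => loader.getResource(name) == null)
}
```

Calling `HiveConfigCheck.missing()` before `SparkSession.builder()` and logging a warning when the result contains `"hive-site.xml"` makes the fallback to the local metastore visible instead of silent.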

评论(0)