MySQL Scale Out

简介

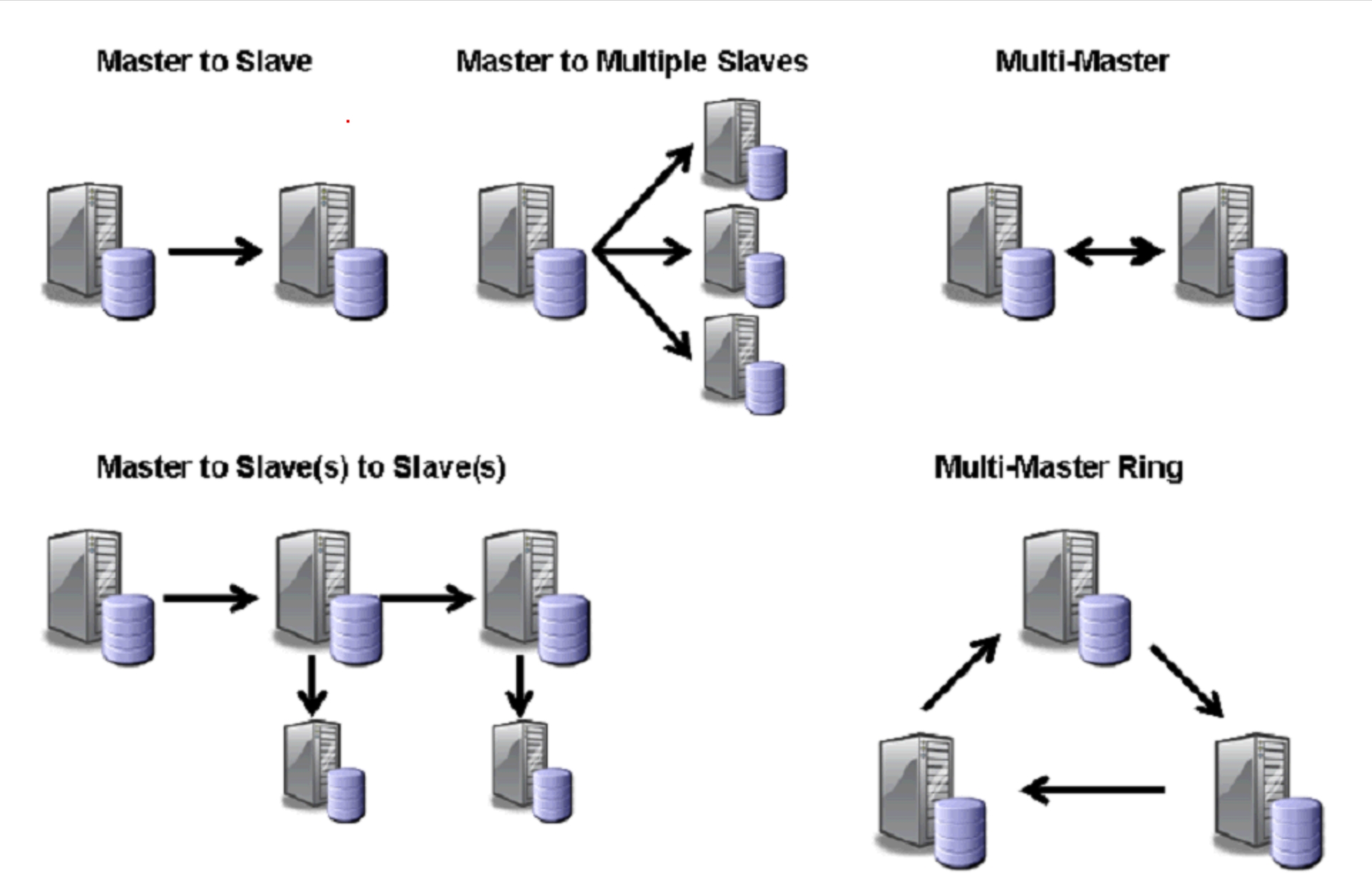

MySQL复制中较常见的复制架构有“一主一从”、“一主多从”、“双主”、“多级复制”和“多主环形机构”等,见下图;

最常用,也最灵活的就要数“一主多从”复制架构了,其能满足多种需求,如:

-

为不同的角色使用不同的备库(例如添加不同的索引或使用不同的存储引擎);

-

把一台备库当做待用的主库,除了复制没有其它数据传输;

-

将一台备库放在远程数据中心,用作灾难恢复;

-

延迟一个或多个备库,以备灾难恢复;

-

使用其中一个备库,作为备份、培训、开发或者测试使用服务器;

而“双主”复制架构则用于特殊的场景下,如两个处于不同地理位置的办公室,且都需要一份可写的数据拷贝;

这种架构最大的问题是如何解决数据冲突和不一致,尤其当两台服务器同时修改同一行记录,或同时在两台服务器上向一个包含auto_increment列的表里插入数据时;

而通过将一台服务器设置为只读的被动服务器,则可以很好的避免数据写入冲突的问题,这种主动-被动模式下的主-主复制架构使得反复切换主动和被动服务器非常方便,可以实现在不关闭服务器的情况下执行维护、优化表、升级操作系统或其他任务;

配置主动-被动模式的主-主复制架构的一般流程:

-

确保两台服务器上有相同的数据;

-

启用二进制日志,选择唯一的服务器ID,并创建复制账号;

-

启用备库更新的日志记录,这是故障转移和故障恢复的关键;

-

把被动服务器配置成只读,防止可能与主动服务器上的更新产生冲突;

-

启动每个服务器的MySQL实例;

-

将每个主库设置为对方的备库,使用新创建的二进制日志开始工作;

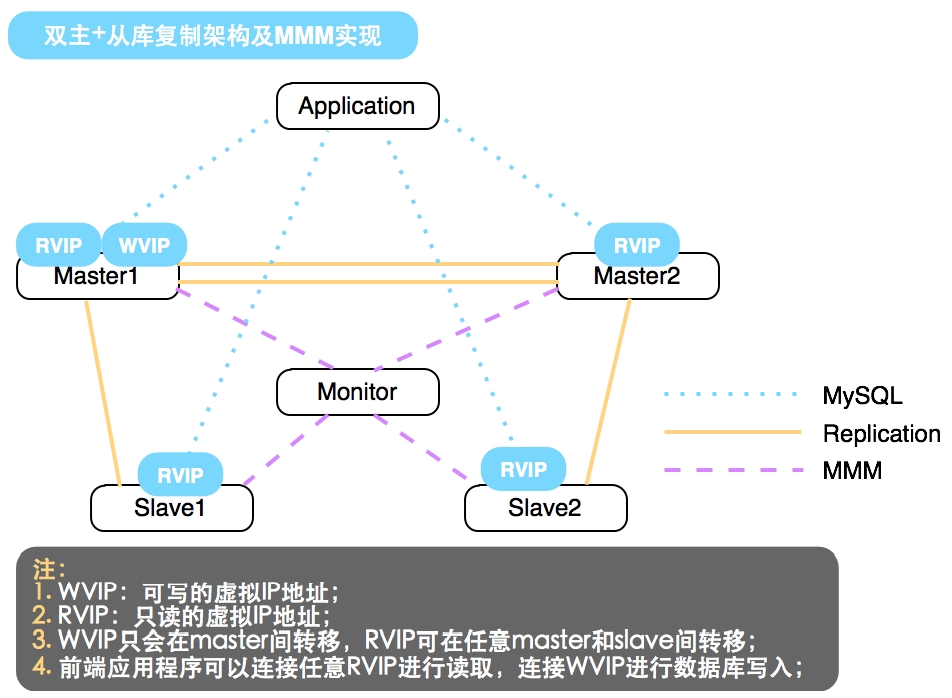

同时为了消除不同地理位置的站点单点故障问题,可以为每个主库增加冗余,即为每一个主库增加一个从库;

而MMM(=Master-Master Replication Manager for MySQL)则是一套脚本集合,用以监控、管理双主复制架构,通过设置一个可写的VIP和多个只读的VIP,完成故障自动转移、读负载分摊等功能;

架构设计

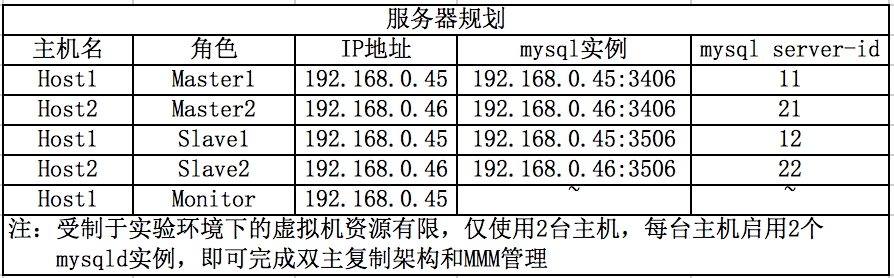

服务器规划

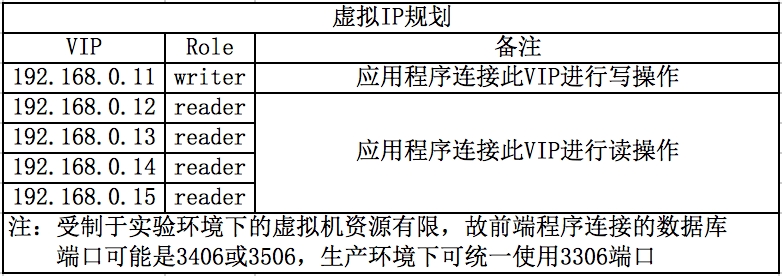

虚IP规划

配置部署

双主复制架构部署

MySQL或MariaDB的安装初始化可详见博客“MySQL架构”

利用mysqld_multi在一台主机上启动多个mysqld实例

数据库初始化

# 在主机Host1和Host2上 cd /usr/local/mysql scripts/mysql_install_db --user=mysql --datadir=/data/mariadb_data_3406/ scripts/mysql_install_db --user=mysql --datadir=/data/mariadb_data_3506/

数据库配置

# 在主机Host1上 vi /etc/my.cnf [mysqld_multi] mysqld = /usr/local/mysql/bin/mysqld_safe mysqladmin = /usr/local/mysql/bin/mysqladmin [mysqld1] port = 3406 socket = /tmp/mysql3406.sock skip-external-locking key_buffer_size = 256M max_allowed_packet = 1M table_open_cache = 256 sort_buffer_size = 1M read_buffer_size = 1M read_rnd_buffer_size = 4M myisam_sort_buffer_size = 64M thread_cache_size = 8 query_cache_size= 16M thread_concurrency = 2 datadir = /data/mariadb_data_3406 innodb_file_per_table = 1 default_storage_engine = InnoDB log-bin=mysql-bin relay-log=/data/relaylogs_3406/relay-bin # 指定中继日志路径 log_slave_updates=1 # 开启从库更新操作写入二进制日志功能 auto_increment_increment=2 # 双主复制中自增长字段的步长 auto_increment_offset=1 # 双主复制中自增长字段的起始值,此为1 sync_binlog = 1 # 可保证事务日志及时写入磁盘文件 binlog_format=row server-id = 11 # 注意server-id的唯一性 [mysqld2] port = 3506 socket = /tmp/mysql3506.sock skip-external-locking key_buffer_size = 256M max_allowed_packet = 1M table_open_cache = 256 sort_buffer_size = 1M read_buffer_size = 1M read_rnd_buffer_size = 4M myisam_sort_buffer_size = 64M thread_cache_size = 8 query_cache_size= 16M thread_concurrency = 2 datadir = /data/mariadb_data_3506 innodb_file_per_table = 1 default_storage_engine = InnoDB log-bin=mysql-bin relay-log=/data/relaylogs_3506/relay-bin log_slave_updates=1 sync_binlog = 1 binlog_format=row server-id = 12 # 在主机Host2上 vi /etc/my.cnf [mysqld_multi] mysqld = /usr/local/mysql/bin/mysqld_safe mysqladmin = /usr/local/mysql/bin/mysqladmin [mysqld1] port = 3406 socket = /tmp/mysql3406.sock skip-external-locking key_buffer_size = 256M max_allowed_packet = 1M table_open_cache = 256 sort_buffer_size = 1M read_buffer_size = 1M read_rnd_buffer_size = 4M myisam_sort_buffer_size = 64M thread_cache_size = 8 query_cache_size= 16M thread_concurrency = 2 datadir = /data/mariadb_data_3406 innodb_file_per_table = 1 default_storage_engine = InnoDB log-bin=mysql-bin relay-log=/data/relaylogs_3406/relay-bin log_slave_updates=1 auto_increment_increment=2 # # 双主复制中自增长字段的步长 auto_increment_offset=2 # 双主复制中自增长字段的起始值,此为2 sync_binlog = 1 binlog_format=row server-id = 21 [mysqld2] port = 3506 socket = /tmp/mysql3506.sock skip-external-locking key_buffer_size = 256M max_allowed_packet = 1M table_open_cache = 256 sort_buffer_size = 1M read_buffer_size = 1M read_rnd_buffer_size = 4M myisam_sort_buffer_size = 64M thread_cache_size = 8 query_cache_size= 16M thread_concurrency = 2 datadir = /data/mariadb_data_3506 innodb_file_per_table = 1 default_storage_engine = InnoDB log-bin=mysql-bin relay-log=/data/relaylogs_3506/relay-bin log_slave_updates=1 sync_binlog = 1 binlog_format=row server-id = 22

启动数据库实例

# 在主机Host1和Host2上 /etc/init.d/mysqld_multi start 1 # 停止服务操作是/etc/init.d/mysqld_multi stop 1 /etc/init.d/mysqld_multi start 2 # 停止服务操作是/etc/init.d/mysqld_multi stop 2

登录数据库

# 在主机Host1和Host2上 mysql -S /tmp/mysql3406.sock # 登录master1或master2 mysql -S /tmp/mysql3506.sock # 登录slave1或slave2

创建所需账户(在Master1实例上)

grant replication client on *.* to ‘3m_moni‘@‘192.168.0.%‘ identified by ‘3m_12345‘; # 创建MMM的监控账户 grant super,replication client,process on *.* to ‘3m_agen‘@‘192.168.0.%‘ identified by ‘3m_12345‘; # 创建MMM的代理账户 grant replication slave on *.* to ‘3m_repl‘@‘192.168.0.%‘ identified by ‘3m_12345‘; # 创建复制账户

配置数据同步

# 每次从库连接主库前,需先查询对应主库的二进制日志文件及其事件位置,即在主库上执行show master status即可,据此决定从库连接时的master_log_file和master_log_pos参数; # slave1实例上 change master to master_host=‘192.168.0.45‘,master_port=3406,master_user=‘3m_repl‘,master_password=‘3m_12345‘,master_log_file=‘mysql-bin.000001‘,master_log_pos=2448; # master2实例上 change master to master_host=‘192.168.0.45‘,master_port=3406,master_user=‘3m_repl‘,master_password=‘3m_12345‘,master_log_file=‘mysql-bin.000002‘,master_log_pos=365; # slave2实例上 change master to master_host=‘192.168.0.46‘,master_port=3406,master_user=‘3m_repl‘,master_password=‘3m_12345‘,master_log_file=‘mysql-bin.000004‘,master_log_pos=342; # master1实例上 change master to master_host=‘192.168.0.46‘,master_port=3406,master_user=‘3m_repl‘,master_password=‘3m_12345‘,master_log_file=‘mysql-bin.000004‘,master_log_pos=342;

查看同步状态

# 重点检查Slave_IO_Running、Slave_SQL_Running和Master_Server_Id等参数 MariaDB [(none)]> show slave status\G *************************** 1. row *************************** Slave_IO_State: Waiting for master to send event Master_Host: 192.168.0.45 Master_User: 3m_repl Master_Port: 3406 Connect_Retry: 60 Master_Log_File: mysql-bin.000005 Read_Master_Log_Pos: 326 Relay_Log_File: relay-bin.000010 Relay_Log_Pos: 613 Relay_Master_Log_File: mysql-bin.000005 Slave_IO_Running: Yes Slave_SQL_Running: Yes Master_Server_Id: 11

MMM安装部署

Host1主机上:部署agent和monitor

yum -y install mysql-mmm-* # 配置公共设置 vi /etc/mysql-mmm/mmm_common.conf active_master_role writer <host default> cluster_interface eth0 pid_path /var/run/mysql-mmm/mmm_agentd.pid bin_path /usr/libexec/mysql-mmm/ replication_user 3m_repl# 复制账户 replication_password 3m_12345# 复制账户密码 agent_user 3m_agen# agent账户 agent_password 3m_12345# agent账户密码 </host> <host db1> ip 192.168.0.45 mysql_port 3406# 可指定需连接的mysqld的端口 mode master peer db2# peer表示db1、db2是同等级别的 </host> <host db2> ip 192.168.0.46 mysql_port 3406 mode master peer db1 </host> <host db3> ip 192.168.0.45 mysql_port 3506 mode slave </host> <host db4> ip 192.168.0.46 mysql_port 3506 mode slave </host> <role writer> hosts db1, db2 ips 192.168.0.11# 可写VIP只配置一个 mode exclusive# 表示排它 </role> <role reader> hosts db1, db2,db3,db4 ips 192.168.0.12,192.168.0.13,192.168.0.14,192.168.0.15 # 只读VIP可配置多个 mode balanced# 表示可以共用 </role> ========== scp mmm_common.conf 192.168.0.46:/etc/mysql-mmm/ # 将公共配置文件拷贝至其它主机 ========== # 配置监控设置 vi /etc/mysql-mmm/mmm_mon.conf include mmm_common.conf <monitor> ip 127.0.0.1 pid_path /var/run/mysql-mmm/mmm_mond.pid bin_path /usr/libexec/mysql-mmm status_path /var/lib/mysql-mmm/mmm_mond.status ping_ips 192.168.0.45,192.168.0.46# 健康监测时需ping的主机IP,不是VIP哦 auto_set_online 60 </monitor> <host default> monitor_user 3m_moni# 监控账户 monitor_password 3m_12345 # 监控账户密码 </host> debug 0 # 配置agent设置 vi /etc/mysql-mmm/mmm_agent.conf include mmm_common.conf this db1# 因为在一台主机上启用了2个mysqld实例,故可配置2个this参数哦 this db3

Host2主机上:只需部署agent

yum -y install mysql-mmm-agent # 配置agent设置 vi /etc/mysql-mmm/mmm_agent.conf include mmm_common.conf this db2 this db4

服务启动

# 在主机Host1上 [root@mysql mysql-mmm]# mmm_control show db1(192.168.0.45) master/ONLINE. Roles: reader(192.168.0.14), writer(192.168.0.11) db2(192.168.0.46) master/ONLINE. Roles: reader(192.168.0.13) db3(192.168.0.45) slave/ONLINE. Roles: reader(192.168.0.15) db4(192.168.0.46) slave/ONLINE. Roles: reader(192.168.0.12)

测试验证

查看双主复制架构中基于MMM实现的状态信息:

# 在主机Host1上 [root@mysql mysql-mmm]# mmm_control show db1(192.168.0.45) master/ONLINE. Roles: reader(192.168.0.14), writer(192.168.0.11) db2(192.168.0.46) master/ONLINE. Roles: reader(192.168.0.13) db3(192.168.0.45) slave/ONLINE. Roles: reader(192.168.0.15) db4(192.168.0.46) slave/ONLINE. Roles: reader(192.168.0.12)

手动进行各节点的健康监测

# 在主机Host1上 [root@mysql mysql-mmm]# mmm_control checks db4 ping [last change: 2014/05/06 22:38:27] OK db4 mysql [last change: 2014/05/06 22:38:27] OK db4 rep_threads [last change: 2014/05/06 22:38:27] OK db4 rep_backlog [last change: 2014/05/06 22:38:27] OK: Backlog is null db2 ping [last change: 2014/05/06 22:38:27] OK db2 mysql [last change: 2014/05/06 22:38:27] OK db2 rep_threads [last change: 2014/05/06 22:38:27] OK db2 rep_backlog [last change: 2014/05/06 22:38:27] OK: Backlog is null db3 ping [last change: 2014/05/06 22:38:27] OK db3 mysql [last change: 2014/05/06 22:38:27] OK db3 rep_threads [last change: 2014/05/06 22:38:27] OK db3 rep_backlog [last change: 2014/05/06 22:38:27] OK: Backlog is null db1 ping [last change: 2014/05/06 22:38:27] OK db1 mysql [last change: 2014/05/06 22:38:27] OK db1 rep_threads [last change: 2014/05/06 22:38:27] OK db1 rep_backlog [last change: 2014/05/06 22:38:27] OK: Backlog is null

补充说明

-

在本篇的演示案例中,前端程序若要与MySQL通信,则写库需连接192.168.0.11:3406,读库可连接192.168.0.12-15中的一个或多个,端口可能是3406或3506;

-

在只读VIP漂移时,会导致前端程序连接的mysqld端口发生变化,所以生产环境下还是统一使用3306端口为宜;

-

利用MMM实现了双主复制架构中的故障自动转移后,mysql并非直接与前端程序通信,还需配合使用读写分离器(如Ameoba),以统一对外的连接地址,由读写分离器负责读写的向下分配;